I was recently asked how I can evangelise Net Zero and sustainability yet happily use AI (mostly Copilot) many times a day, given that data centres use around 2–3% of the world’s electricity.

The question was asked in jest, but it’s a valid one, so Copilot and I did the maths…

Energy use per query

A typical Google search uses about 0.0003 kWh of energy (0.3 watt-hours), whereas a query to an AI model similar to Copilot uses about 0.003 kWh (3 watt-hours). That’s ten times as much!

However, it often takes five or more Google searches to gather the information that a single, well-formed AI query returns.
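Putting the two per-query figures side by side shows how much the gap narrows when one AI query replaces several searches. This is a minimal sketch that uses only the two figures quoted above; the number of searches replaced is the variable to experiment with.

```python
# Per-query energy figures quoted in the text, in kilowatt-hours.
SEARCH_KWH = 0.0003   # typical Google search (0.3 Wh)
AI_QUERY_KWH = 0.003  # query to an AI model similar to Copilot (3 Wh)


def ai_to_search_ratio(searches_replaced: int) -> float:
    """Energy of one AI query divided by the energy of the searches it replaces."""
    if searches_replaced <= 0:
        raise ValueError("searches_replaced must be positive")
    return AI_QUERY_KWH / (searches_replaced * SEARCH_KWH)


for n in (1, 5, 10):
    print(f"{n:>2} searches replaced: AI uses {ai_to_search_ratio(n):.1f}x the energy")
```

With these figures, one AI query that replaces five searches still uses about twice the energy of those searches, and the two break even at around ten searches.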

Companies like Microsoft report sourcing almost all of their electricity from renewable or carbon-neutral supplies, and they actively invest in these sources.

Source: Microsoft, “Measuring energy and water efficiency for Microsoft datacenters”

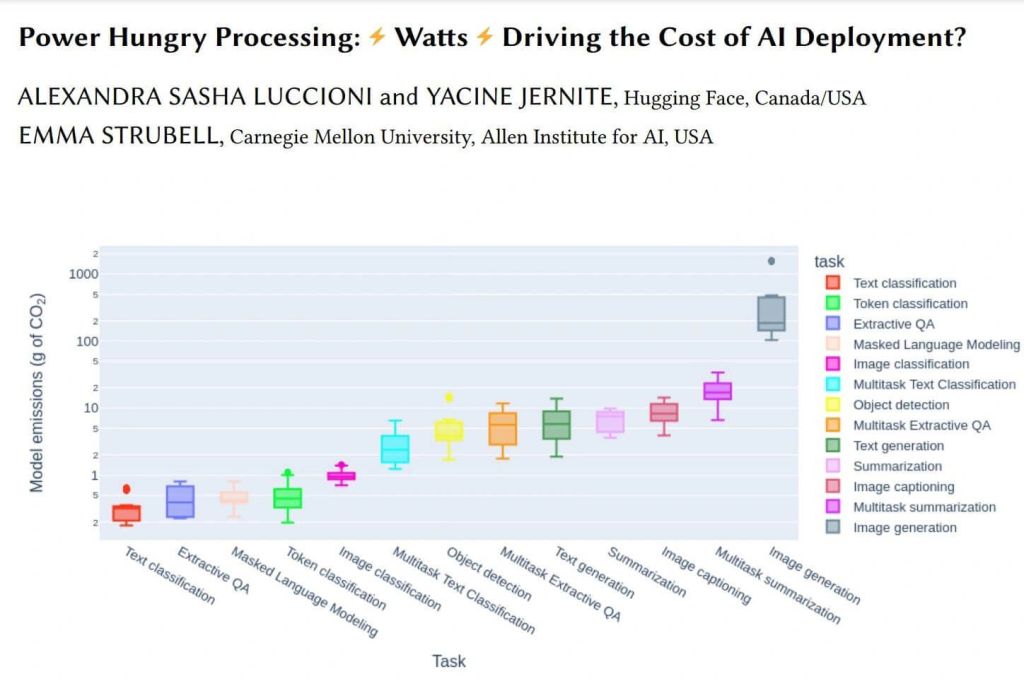

It’s not quite as simple as that. Queries of different complexity, whether search or AI, consume very different amounts of electricity. Note the logarithmic scale on this chart.

Establishing the baseline

More to the point, while data centre use is expanding, partly driven by AI, local IT (on-premises server farms) has shrunk enormously.

Local server farms make remarkably poor use of resources, and most of the organisations running them were not choosing renewable electricity. The scale of this inefficiency and consumption was largely hidden because it was dispersed across tens of thousands of corporate and public-sector sites and accounts.

The Baseline: Global Data Centre Energy Use

Recent international estimates indicate that today’s consolidated data centres consume roughly 200–340 terawatt‐hours (TWh) per year.

In many studies, including work by the International Energy Agency (IEA) and academic research, the figure for non-cryptocurrency data centres comes in at around 200–205 TWh, roughly 1–1.3% of global electricity consumption. These figures largely reflect modern “hyperscale” facilities that benefit from deliberate design choices, advanced cooling, optimised server utilisation and overall economies of scale.

Nevertheless, AI workloads are rapidly reshaping the data centre landscape. Currently, ~33% of global data centre capacity is dedicated to AI; this is expected to reach 70% by 2030. The demand for AI-ready data centres is soaring, with about 70% of future data centre growth projected to be for advanced AI workloads.

What if Every Organisation Ran Its Own On‑Premises Server Farm?

When individual organisations run their own server rooms or small data centres, they typically lack the design innovations and operational focus on efficiency that big cloud providers have developed. Some key inefficiencies include:

- Lower Utilisation: On‑premises systems often run below full capacity, wasting energy on hardware that isn’t used to its potential.

- Inefficient Cooling and Power Distribution: Whereas hyperscale centres boast cutting‑edge cooling systems and power management (often achieving Power Usage Effectiveness or PUE values near 1.1–1.2), many smaller on‑premises sites operate with PUE values in the range of 1.8–2.0 or even higher. (PUE is the ratio of total facility energy to IT energy—so higher values reflect more energy “waste” in cooling and ancillary systems.)

- Redundancy and Legacy Overheads: Small-scale facilities often keep older hardware running, may not apply energy-saving upgrades with the same rigour, and cannot share improvements across many sites.
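The PUE definition in the list above can be turned into a quick calculation of how much of a facility’s energy never reaches the IT equipment. This is a minimal sketch: the function names are illustrative, and the two PUE values are the ones quoted in the list.

```python
def pue(total_facility_kwh: float, it_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT energy."""
    if it_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_facility_kwh / it_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility energy spent on cooling, power distribution and
    other non-IT systems. Follows from PUE = total / IT, so overhead = (PUE - 1) / PUE."""
    if pue_value < 1:
        raise ValueError("PUE cannot be below 1")
    return (pue_value - 1) / pue_value


# PUE values quoted in the text: hyperscale ~1.1-1.2, smaller on-premises ~1.8-2.0.
for label, value in [("hyperscale", 1.2), ("on-premises", 2.0)]:
    print(f"{label:>12}: PUE {value:.1f} -> {overhead_share(value):.0%} of energy is overhead")
```

At a PUE of 2.0, half of all the energy a site draws goes on cooling and ancillary systems rather than computing, compared with about a sixth at 1.2.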

If we compare a “best‑in‑class” hyperscale data centre with a typical on‑premises server farm, the ratio of energy efficiency can be estimated roughly by comparing PUE values. For instance, if a hyperscale centre runs at a PUE of about 1.2 and an on‑premises server farm averages 2.0, then for the same computational workload the on‑premises approach would need 2.0 ÷ 1.2 ≈ 1.67 times the energy, almost 70% more. For smaller or older facilities, where PUE values can be even higher (or utilisation even lower), the factor may well approach 2 to 3 times the energy of a well-optimised centralised facility.
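The PUE comparison above can be sketched as a small function. The PUE ratio is the method used in the text; the optional utilisation terms are an added, deliberately pessimistic simplification (servers drawing about the same power whether busy or idle), included only to show how low utilisation could push the multiplier towards 2 to 3 times. The utilisation values in the second example are hypothetical.

```python
def onprem_multiplier(pue_onprem: float, pue_hyperscale: float,
                      util_onprem: float = 1.0, util_hyperscale: float = 1.0) -> float:
    """Estimated energy for a workload run on-premises, relative to hyperscale.

    The PUE ratio is the comparison used in the article. The utilisation terms are
    an added assumption: if servers draw roughly constant power regardless of load,
    energy per unit of useful work scales with 1 / utilisation.
    """
    if min(pue_onprem, pue_hyperscale, util_onprem, util_hyperscale) <= 0:
        raise ValueError("all inputs must be positive")
    pue_ratio = pue_onprem / pue_hyperscale
    utilisation_ratio = util_hyperscale / util_onprem
    return pue_ratio * utilisation_ratio


# PUE only, using the figures in the text (2.0 on-premises vs 1.2 hyperscale).
print(f"PUE only: {onprem_multiplier(2.0, 1.2):.2f}x")

# Hypothetical utilisation of 30% on-premises vs 50% in a hyperscale facility.
print(f"With utilisation: {onprem_multiplier(2.0, 1.2, 0.3, 0.5):.2f}x")
```

The first case gives about 1.67×, matching the “almost 70% more” in the text; under the hypothetical utilisation figures the second rises to about 2.8×.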

Putting the Numbers Together

- Current Centralised Scenario: Global energy use by hyperscale data centres is about 200–340 TWh per year.

- Distributed On‑Premises Scenario: If the same computing demand were met by countless individual on‑premises server farms, with typical inefficiencies multiplying energy needs by roughly 1.7 to 3 times, the overall global energy requirement could be roughly 340–1,000 TWh per year (1.7 × 200 TWh at the low end, 3 × 340 TWh at the high end).

Consolidating IT workloads into efficient, large-scale data centres has therefore saved the world roughly half of the energy that would be used if every organisation ran its own servers with typical on‑premises overheads: about 40% at a 1.7× multiplier, 50% at 2× and two-thirds at 3×.
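The scenario arithmetic above can be checked with a few lines of code. This sketch uses only the figures from the text (200–340 TWh per year and a 1.7× to 3× multiplier); the saving from consolidation is simply 1 − 1/multiplier.

```python
# Figures from the text: hyperscale use of 200-340 TWh/year and an
# on-premises overhead multiplier of roughly 1.7x to 3x.
CENTRALISED_TWH = (200.0, 340.0)
MULTIPLIER = (1.7, 3.0)


def distributed_range(centralised: tuple[float, float],
                      multiplier: tuple[float, float]) -> tuple[float, float]:
    """Lowest and highest annual energy if the same workload ran on-premises."""
    return centralised[0] * multiplier[0], centralised[1] * multiplier[1]


def saving(multiplier: float) -> float:
    """Share of on-premises energy avoided by consolidating: 1 - 1/multiplier."""
    if multiplier < 1:
        raise ValueError("multiplier must be at least 1")
    return 1.0 - 1.0 / multiplier


low, high = distributed_range(CENTRALISED_TWH, MULTIPLIER)
print(f"Distributed scenario: {low:.0f}-{high:.0f} TWh/year")
for m in (1.7, 2.0, 3.0):
    print(f"{m:.1f}x overhead -> consolidation saves {saving(m):.0%}")
```

This gives a distributed range of about 340–1,020 TWh per year and savings of roughly 41%, 50% and 67% for multipliers of 1.7×, 2× and 3×.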

Beyond the Numbers

While these numbers offer a rough estimate, the exact multiplier is highly sensitive to:

- The specific PUE (and other efficiency metrics) of on‑premises installations versus hyperscale centres.

- The degree to which on‑premises systems can be modernised versus the inevitable inefficiencies of smaller-scale operations.

- Variations in workload types and the impact of advanced technologies (like virtualization and AI acceleration) that also influence efficiency.

- Where companies choose to source their electricity.

These factors mean that while our estimate of a 2–3× higher energy footprint for a distributed on‑premises model is reasonable, the real multiplier varies by region and will change over time as more organisations adopt energy‑efficient practices.

Takeaways

Energy consumption by AI data centres is a valid concern, but one that is almost certainly overblown compared with the baseline alternative, and one that conveniently ignores the other cloud services people use all day long without a second thought.

Today’s hyperscale data centres, consuming roughly 200–340 TWh per year, are likely using only about half (or even less) of the energy that would be required if similar computing workloads were handled by many discrete on‑premises server farms. This consolidation not only reduces overall energy consumption but also brings significant environmental and cost benefits.

That said, data centre use is projected to keep increasing.

What we should do about it

Demonising AI or data centres without understanding the data, and how our own behaviour contributes to energy consumption, is illogical. Our modern world runs on technology, and advances in it are helping to address more climate change issues than they cause. Energy use isn’t a problem as long as that energy comes from renewable or zero-carbon sources.

Ultimately, people who are concerned about the environment should be making their own investments in solar PV and similar technologies: they are good for the environment and one of the best financial investments anyone can make.

As for me, my home solar array exported enough electricity to power 8,600 #AI queries yesterday. That’s about two years’ worth at my current use.

You’re welcome 😁
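For anyone who wants to check the solar claim, here is a back-of-the-envelope sketch. It reuses the 0.003 kWh per AI query figure from earlier; the 365-day year is the only added assumption.

```python
AI_QUERY_KWH = 0.003    # per-query figure used earlier in the article
QUERIES_POWERED = 8600  # AI queries' worth of electricity exported in one day
YEARS_OF_USE = 2        # the author's estimate of how long that would last
DAYS_PER_YEAR = 365     # assumption: ignores leap years

exported_kwh = QUERIES_POWERED * AI_QUERY_KWH
queries_per_day = QUERIES_POWERED / (YEARS_OF_USE * DAYS_PER_YEAR)

print(f"Exported that day: about {exported_kwh:.1f} kWh")
print(f"Implied personal use: about {queries_per_day:.0f} AI queries per day")
```

That works out to roughly 26 kWh exported in a day and a personal habit of about a dozen AI queries a day.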

Sources

- Search Engines vs AI: energy consumption compared

- https://www.hable.co.uk/news/using-generative-ai-in-an-environmentally-friendly-way

- https://thenetworkinstallers.com/blog/ai-data-center-statistics/